Product testing and review is a very important step in convincing people to buy, or not to buy, a certain product. Customers view it as more credible than marketing aimed directly at them, because the perception is that reviewers are not “bought” (even though vendors pay for many tests!). If the organization or individual doing the testing wants to be ethical, they need to ensure that the test is as relevant as possible, meaning that test scores should be as close an indicator of the product’s real-world performance as possible. Then again, many (all?) testing venues face different levels of time and money constraints, which they must balance.

Going from the general to the particular, here are some security-related tests and my opinion about them:

- The latest av-comparatives: as always, it contains some high-quality information and some good remarks. The sad thing is that the rest of the testing industry doesn’t have such high standards. One thing I found especially valuable was the remark that “real-time updating” technologies (like Artemis from McAfee) need to be taken into account when evaluating the detection rates. However, the “front-page” scores were those with this technology enabled (probably to avoid criticism from the company), which means that it wasn’t entirely an apples-to-apples comparison. It also raises some questions regarding the evaluation criteria: (a) What about retroactive tests? Will these capabilities be enabled or disabled during them? If they are disabled, won’t vendors object? (b) If the “in-the-cloud” system performs some automatic detection (i.e. “this file has been seen by more than 100 people, so I declare it bad”), how does the test quantify the risk of being among the first 100 people?

- As a counter-example: AV-Test did a test for PCWorld which included “passive rootkits” (inactive samples, as opposed to rootkits already running on the system). This means that the capability of the products to disinfect already infected systems (which is arguably a very important scenario) is never tested. As a friend puts it: If I see the phrase "passive rootkits" (as opposed to "active rootkits") one more time in AV tests (or elsewhere, for that matter), I’ll snap. To add insult to injury, the linked PCWorld article doesn’t list the tested products in any obvious order (i.e. descending by score or alphabetical). This makes me believe that the listing order is somehow influenced by monetary reasons (direct payment or advertising revenue).

- A somewhat-in-the-middle example: the “Web Browser Security – Protection Against Socially Engineered Malware” test from NSS Labs (this was controversial because Microsoft sponsored it and IE8 came out at the top by a long shot). The positive aspects of the test: it was quite transparent (both the paper and a webcast presenting it are available free of charge and without registration – something rarely seen these days). The bad things: they fail to give convincing explanations for the discrepancies between results of browsers using the same data source. To be more specific:

- Firefox, Chrome and Safari all consume the Google Safe Browsing API, so their results should be identical. The one possible explanation they raised (the different storage format employed) doesn’t sound credible. A more probable explanation would be the different update intervals of the products; however, this aspect wasn’t analyzed.

- Given that IE7 does not contain any filtering technologies, any "prevention" by it must signal an error with the sample. As this test specifically ignored (and actively filtered out) exploits, the argument cannot be made that, for example, the site contained an exploit for Firefox 3 but not for IE7. I repeat: any URL "filtered" by IE7 (and there are around 5%) signals a discrepancy.

- The fact that they had stability issues with Google Chrome and Apple Safari raises some questions about their setup. For example, they used some kind of whitelisting / application lock-down software, which might have been the cause of the instability (again, because exploits were explicitly filtered out, they can’t be the source of the instability).

- The fact that they mention product updates in the document ("However, some browsers, including Google Chrome and Safari attempted to update themselves automatically without any user permission. This caused problems for our testing harness, which were eventually overcome during pre-testing") means that there is the possibility that for some products the versions used in the test weren’t the latest version.

- Tests performed by Virus Bulletin: these are performed on a smaller sample set. Specifically, they collect samples from members and consider only the ones which they obtained from more than one member. The disadvantage of this method is that a member can flood VB with samples and have a much bigger probability of having “collisions” (common samples) with other members, skewing the results in their favor. The counterargument, of course, is that all members have an equal opportunity to “flood”, and if all of them sent larger sets of samples, the overall quality of the test would improve. This doesn’t happen currently, however. VB recently started to experiment with retroactive testing (see their methodology; free registration required, or use BugMeNot), but the first public test run contained some surprising results, which probably indicate problems with their sample selection methodology (or maybe the sample-set size): in almost all cases the detection rate increased with the freshness of the malware. One would expect a decrease in detection, given that the signatures of the products were not updated.
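To make the “flooding” argument about the Virus Bulletin sample selection a bit more concrete, here is a toy simulation. It is only a sketch under my own assumptions, not VB’s actual process: the function name `shared_sample_coverage`, the universe size and the submission sizes are all made up, each member submits a uniformly random subset of the malware “in the wild”, and the test set consists of the samples submitted by at least two members. For each member it reports which fraction of the test set came from their own submission (samples they presumably detect).

```python
import random
from collections import Counter


def shared_sample_coverage(submission_sizes, universe_size=10_000, seed=0):
    """Toy model (hypothetical, not VB's real pipeline).

    Each member submits `submission_sizes[i]` distinct samples drawn
    uniformly from a universe of `universe_size` samples. The test set
    keeps only samples submitted by two or more members. Returns the
    test-set size and, per member, the fraction of the test set that
    was also in that member's own submission.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    universe = range(universe_size)
    submissions = [set(rng.sample(universe, size)) for size in submission_sizes]

    # Count how many members submitted each sample.
    counts = Counter(sample for sub in submissions for sample in sub)
    test_set = {sample for sample, n in counts.items() if n >= 2}

    coverage = [len(sub & test_set) / len(test_set) for sub in submissions]
    return len(test_set), coverage


# Five members sending equal amounts vs. the first member "flooding".
even_size, even_cov = shared_sample_coverage([1000] * 5)
flood_size, flood_cov = shared_sample_coverage([5000, 1000, 1000, 1000, 1000])

print("even :", even_size, [round(c, 2) for c in even_cov])
print("flood:", flood_size, [round(c, 2) for c in flood_cov])
```

In this model the flooding member ends up “owning” a much larger share of the test set than anybody else, while the test set itself also grows, which fits both the objection and the counterargument above.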

Is there a conclusion to all of these ramblings? I guess, just the obvious: creating a good and trustworthy test is hard. Not enough respect is given to the testers, and there isn’t enough scrutiny of the tests by the public and by knowledgeable people. As always, take test results with a grain of salt.

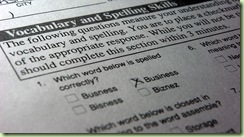

Picture taken from cheese roc’s photostream with permission.